Difference between revisions of "Vision-based Navigation and Manipulation"

From Robot Intelligence

Kistvision (Talk | contribs) (→Concept) |

Kistvision (Talk | contribs) (→Related papers) |

||

| (15 intermediate revisions by the same user not shown) | |||

| Line 2: | Line 2: | ||

==Concept== | ==Concept== | ||

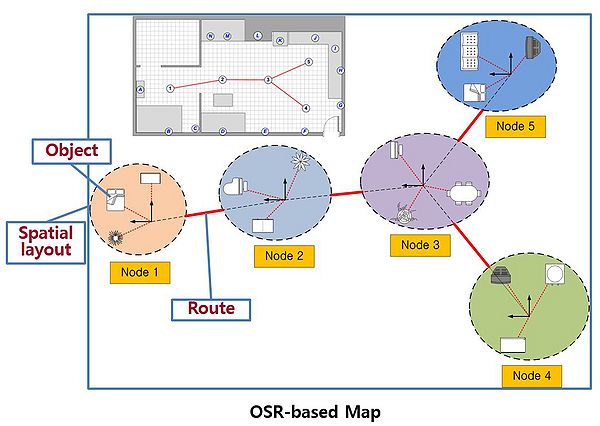

* As a map representation, we proposed a hybrid map using object-spatial layout-route information. | * As a map representation, we proposed a hybrid map using object-spatial layout-route information. | ||

| − | * | + | * Our global localization is based on object recognition and its pose relationship, and the local localization uses 2D-contour matching by 2D laser scanning data. |

* Our map representation is like this: | * Our map representation is like this: | ||

::[[File:map-repres.jpg|600px|left]] | ::[[File:map-repres.jpg|600px|left]] | ||

| − | + | <br/><br/><br/><br/><br/><br/><br/><br/><br/><br/><br/><br/><br/><br/><br/><br/><br/><br/><br/><br/> | |

* The Object-based global localization is as follows: | * The Object-based global localization is as follows: | ||

| + | ::::::[[File:GL.jpg|500px|left]] | ||

| + | <br/><br/><br/><br/><br/><br/><br/><br/><br/><br/><br/><br/><br/><br/><br/><br/><br/><br/> | ||

| + | <br/><br/><br/><br/><br/><br/><br/><br/><br/><br/> | ||

==Related papers== | ==Related papers== | ||

| − | + | * S Park, ''Sung-Kee Park'', "Global localization for mobile robots using reference scan matching," International Journal of Control, Automation and Systems 12 (1), 156-168, 2014. | |

| − | + | * S Kim, H Cheong, DH Kim, ''Sung-Kee Park'', "Context-based object recognition for door detection," Advanced Robotics (ICAR), 2011 15th International Conference on, 155-160, 2011. | |

| + | * S Park, ''Sung-Kee Park'', "pectral scan matching and its application to global localization for mobile robots," Robotics and Automation (ICRA), 2010 IEEE International Conference on, 1361-1366. 2010. | ||

| + | * S Park, S Kim, M Park, ''Sung-Kee Park'', "Vision-based global localization for mobile robots with hybrid maps of objects and spatial layouts," Information Sciences 179 (24), 4174-4198, 2009. | ||

| + | <br/> | ||

=Unknown Objects Grasping= | =Unknown Objects Grasping= | ||

==Concept== | ==Concept== | ||

| + | * With a stereo vision(passive 3D sensor) and a Jaw-type hand, we studied a method for any unknown object grasping. | ||

| + | * In the context of perception with only one-shot 3D image, three graspable directions such as lift-up, side and frontal direction are suggested, and an affordance-based grasp, handle graspable, is also proposed. | ||

| + | * Our experimental movie clip : https://www.youtube.com/watch?v=YVfTltLy2w0 | ||

| + | * Our grasp directions are as follows: | ||

| + | ::[[File:grasp directions.jpg|500px|left]] | ||

| + | <br/><br/><br/><br/><br/><br/><br/><br/><br/><br/><br/><br/><br/><br/><br/><br/> | ||

| + | * The schema of our whole grasping process is like this: | ||

| + | ::[[File:grasp-sche.jpg|800px|left]] | ||

| + | <br/><br/><br/><br/><br/><br/><br/><br/><br/><br/><br/><br/><br/> | ||

==Related papers== | ==Related papers== | ||

| + | *RK Ala, DH Kim, SY Shin, CH Kim, ''Sung-Kee Park'', A 3D-grasp synthesis algorithm to grasp unknown objects based on graspable boundary and convex segments," Information Sciences 295, 91-106, 2015 | ||

Latest revision as of 21:17, 26 May 2016


Concept

- As a map representation, we proposed a hybrid map that combines object, spatial-layout, and route information.

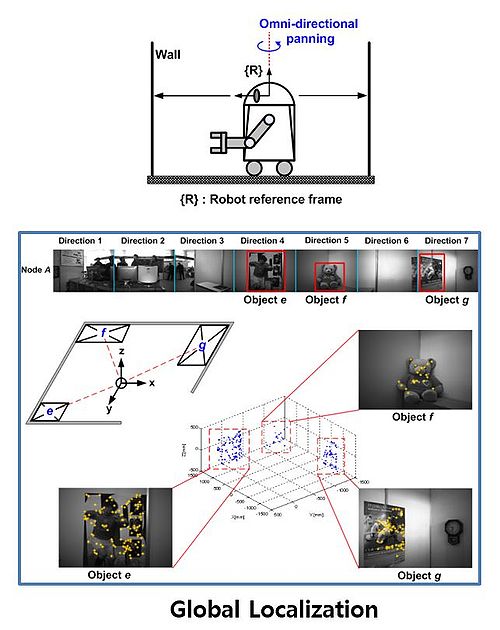

- Our global localization is based on object recognition and the pose relationships of the recognized objects, while local localization uses 2D contour matching on 2D laser scan data.

- Our map representation is shown in the figure map-repres.jpg.

- The object-based global localization is shown in the figure GL.jpg.
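As a rough illustration of how a recognized object can anchor global localization, the sketch below recovers a robot pose in the map frame from one object whose map pose is known and whose pose relative to the robot has been observed. It is a minimal planar (x, y, heading) example written for this page, not the method of the papers listed below; the function names `compose`, `invert`, and `robot_pose_from_object` are hypothetical.

```python
import math


def wrap(angle):
    """Wrap an angle to the interval (-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def compose(a, b):
    """Compose two planar poses (x, y, theta): pose b expressed in frame a."""
    ax, ay, at = a
    bx, by, bt = b
    c, s = math.cos(at), math.sin(at)
    return (ax + c * bx - s * by, ay + s * bx + c * by, wrap(at + bt))


def invert(p):
    """Invert a planar pose: (R, t) becomes (R^T, -R^T t)."""
    x, y, t = p
    c, s = math.cos(t), math.sin(t)
    return (-(c * x + s * y), s * x - c * y, wrap(-t))


def robot_pose_from_object(object_in_map, object_in_robot):
    """Assumed setup: the map stores the object's pose, and recognition
    yields the object's pose in the robot frame. Then
    robot_in_map = object_in_map * inverse(object_in_robot)."""
    return compose(object_in_map, invert(object_in_robot))


if __name__ == "__main__":
    # Hypothetical values: an object stored in the map, and the same
    # object as observed from the robot.
    object_in_map = (4.0, -1.0, -math.pi / 3)
    object_in_robot = (2.0, 0.5, math.pi / 6)
    print(robot_pose_from_object(object_in_map, object_in_robot))
```

With several recognized objects, each one would yield a pose hypothesis of this kind, and a local step such as laser scan matching could then refine the estimate.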

Related papers

- S Park, Sung-Kee Park, "Global localization for mobile robots using reference scan matching," International Journal of Control, Automation and Systems 12 (1), 156-168, 2014.

- S Kim, H Cheong, DH Kim, Sung-Kee Park, "Context-based object recognition for door detection," Advanced Robotics (ICAR), 2011 15th International Conference on, 155-160, 2011.

- S Park, Sung-Kee Park, "Spectral scan matching and its application to global localization for mobile robots," Robotics and Automation (ICRA), 2010 IEEE International Conference on, 1361-1366, 2010.

- S Park, S Kim, M Park, Sung-Kee Park, "Vision-based global localization for mobile robots with hybrid maps of objects and spatial layouts," Information Sciences 179 (24), 4174-4198, 2009.

Unknown Objects Grasping

Concept

- Using stereo vision (a passive 3D sensor) and a jaw-type hand, we studied a method for grasping arbitrary unknown objects.

- Given perception from only a single one-shot 3D image, we suggest three graspable directions (lift-up, side, and frontal) and also propose an affordance-based grasp (handle graspable).

- Our experimental video clip: https://www.youtube.com/watch?v=YVfTltLy2w0

- Our grasp directions are shown in the figure grasp directions.jpg.

- The schema of our whole grasping process is shown in the figure grasp-sche.jpg.

Related papers

- RK Ala, DH Kim, SY Shin, CH Kim, Sung-Kee Park, "A 3D-grasp synthesis algorithm to grasp unknown objects based on graspable boundary and convex segments," Information Sciences 295, 91-106, 2015.