3W for HRI

From Robot Intelligence

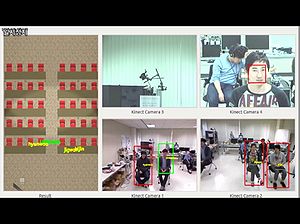

- [3D simulation of 3W Fusion](https://youtu.be/kyu7psC4IG0-_I)

![3D simulation of 3W Fusion](<(SimonPiC2)_3W FUSION Simulation.jpg>)

Latest revision as of 18:45, 22 October 2017

Project Outline

- Research Period: 2012.6 ~ 2017.5

- Funded by the Ministry of Trade, Industry and Energy (Grant No: 10041629)

- Members: Sung-Kee Park, Ph.D.; Yoonseob Lim, Ph.D.; Sang-Seok Yun, Ph.D.; Junghoon Kim, M.S.; Hoang Minh Do (UST); Donghui Song (UST); Hyeonuk Bhin (UST); Gyeore Lee (UST)

Introduction and Research Targets

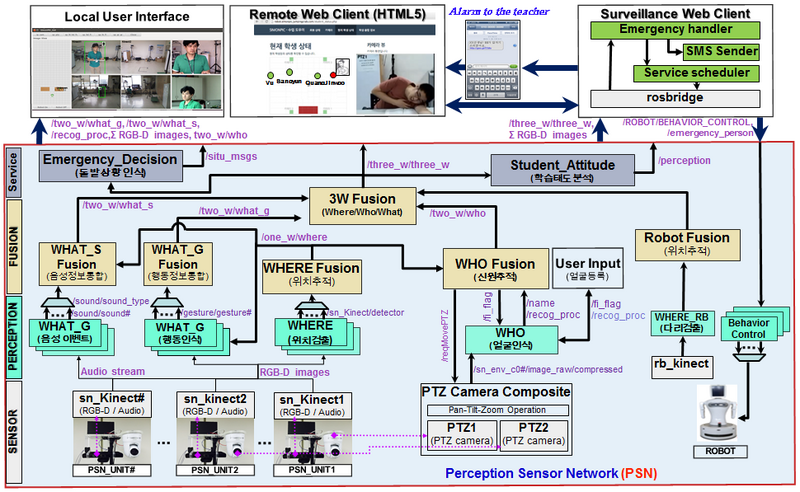

1. This project implements technologies that recognize the identity (WHO), behavior (WHAT), and location (WHERE) of people through a sensor-network fusion program.

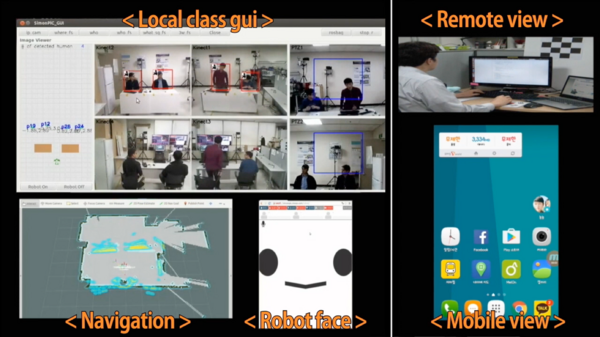

2. In this project, we develop a robot-assisted management system that responds promptly to abnormal events in classroom environments. The system is designed to:

   - Reliably detect human-caused emergencies using audio-visual perception modules.
   - Send an urgent SMS to alert someone that an emergency has occurred, and relay on-site information to them.
   - React immediately to the event on behalf of the remote user, using robot navigation and interaction technologies.
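The detect, notify, and react sequence in item 2 can be sketched as a small event loop. This is a minimal sketch under stated assumptions, not the project's implementation. The `Observation` record, the 0..1 audio and visual confidence scores, the equal-weight late fusion with a 0.7 threshold, and the `send_sms` / `dispatch_robot` callbacks are all hypothetical stand-ins for the real perception modules, SMS gateway, and robot navigation stack.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Observation:
    """One audio-visual observation (hypothetical format, not from the project)."""
    timestamp: float     # seconds since monitoring started
    location: str        # e.g. a classroom zone label
    audio_score: float   # assumed 0..1 confidence from an audio event detector
    visual_score: float  # assumed 0..1 confidence from a visual event detector


def is_emergency(obs: Observation, threshold: float = 0.7, audio_weight: float = 0.5) -> bool:
    # Weighted late fusion of the two modality scores (an assumed scheme).
    fused = audio_weight * obs.audio_score + (1.0 - audio_weight) * obs.visual_score
    return fused >= threshold


def handle(observations: List[Observation],
           send_sms: Callable[[str], None],
           dispatch_robot: Callable[[str], None]) -> Optional[Observation]:
    """Detect -> notify -> react, stopping at the first emergency found."""
    for obs in observations:
        if is_emergency(obs):
            # Hypothetical callbacks: wrap the real SMS gateway and navigation stack here.
            send_sms(f"EMERGENCY at {obs.location} (t={obs.timestamp:.1f}s)")
            dispatch_robot(obs.location)
            return obs
    return None


if __name__ == "__main__":
    sent, moves = [], []
    stream = [Observation(0.0, "front row", 0.2, 0.1),
              Observation(1.5, "back row", 0.9, 0.8)]
    handle(stream, sent.append, moves.append)
    print(sent)   # ['EMERGENCY at back row (t=1.5s)']
    print(moves)  # ['back row']
```

Passing the notification and robot actions in as callbacks keeps the sketch independent of any particular SMS service or robot middleware.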

Developing Core Technologies

DETECTION

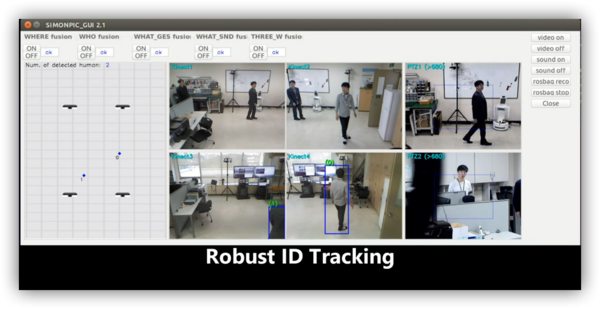

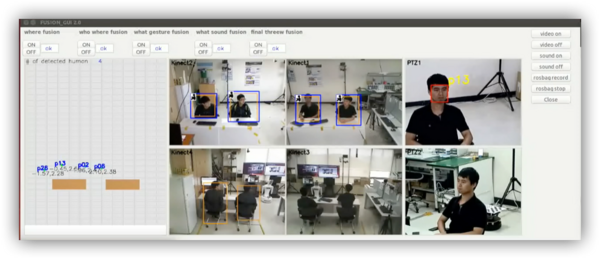

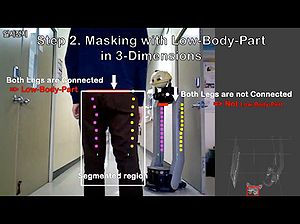

- WHERE: Human detection and localization

- WHO: Face recognition and ID tracking

- WHAT: Recognition of individual and group behavior

- 3W data association on the perception sensor network
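The 3W data-association item can be illustrated with a simple gated nearest-neighbour scheme that attaches WHO (face identities) and WHAT (behavior labels) to WHERE (person tracks). This is a hypothetical sketch, since the page does not describe the association method. The shared floor-plane coordinates, the 0.5 m gate, the greedy matching (swapped in here for, e.g., an optimal assignment solver), and the example labels are all assumptions.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]  # assumed common floor-plane (x, y) frame, in metres


@dataclass
class Person:
    track_id: int
    position: Point                 # WHERE
    identity: Optional[str] = None  # WHO
    behavior: Optional[str] = None  # WHAT


def greedy_associate(tracks: List[Point], detections: List[Point], gate: float) -> Dict[int, int]:
    """Greedy nearest-neighbour matching, returning {detection_index: track_index}.

    Pairs are taken in order of increasing distance; each track and each detection
    is used at most once, and pairs farther apart than `gate` are never matched.
    """
    pairs = sorted(
        (math.dist(t, d), ti, di)  # math.dist requires Python 3.8+
        for ti, t in enumerate(tracks)
        for di, d in enumerate(detections)
    )
    used_tracks = set()
    matches: Dict[int, int] = {}
    for dist, ti, di in pairs:
        if dist > gate:
            break  # all remaining pairs are even farther apart
        if ti in used_tracks or di in matches:
            continue
        used_tracks.add(ti)
        matches[di] = ti
    return matches


def fuse_3w(people: List[Person],
            faces: List[Tuple[Point, str]],
            behaviors: List[Tuple[Point, str]],
            gate: float = 0.5) -> List[Person]:
    """Attach face IDs and behavior labels to the nearest person track within the gate."""
    positions = [p.position for p in people]
    for di, ti in greedy_associate(positions, [pos for pos, _ in faces], gate).items():
        people[ti].identity = faces[di][1]
    for di, ti in greedy_associate(positions, [pos for pos, _ in behaviors], gate).items():
        people[ti].behavior = behaviors[di][1]
    return people
```

For example, with tracks at (0, 0) and (3, 1), a face detected at (2.9, 1.1) is attached to the second track, while a behavior detected at (10, 10) falls outside every gate and stays unassigned.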

AUTOMATIC SURVEILLANCE

- Automatic message transmission for human-caused emergencies

- Analysis of student attitude

- Remote monitoring via Web technologies and stream server

ROBOT REACTION

- Human-friendly robot behavior

- Gaze control and robot navigation

- Human following with recovery mechanism
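A recovery mechanism for human following can take many forms. One minimal sketch, assuming a per-frame detector that returns the target's position or `None`, is a three-state controller: it tolerates brief occlusions, then searches around the last known position, and finally gives up. The `Follower` class, its states, and the `max_missed` / `search_limit` thresholds are hypothetical and do not describe the project's actual design.

```python
from enum import Enum, auto
from typing import Optional, Tuple

Position = Tuple[float, float]


class State(Enum):
    FOLLOWING = auto()
    SEARCHING = auto()  # target lost; heading to its last known position
    GAVE_UP = auto()


class Follower:
    """Hypothetical following controller with a simple recovery mechanism."""

    def __init__(self, max_missed: int = 3, search_limit: int = 10):
        self.state = State.FOLLOWING
        self.max_missed = max_missed      # missed frames tolerated before searching
        self.search_limit = search_limit  # extra missed frames before giving up
        self.missed = 0
        self.last_seen: Optional[Position] = None

    def step(self, target: Optional[Position]) -> Optional[Position]:
        """Feed one perception result; return a navigation goal, or None to stop."""
        if target is not None:
            # Target (re)acquired from any state: resume following.
            self.state = State.FOLLOWING
            self.missed = 0
            self.last_seen = target
            return target
        if self.state is State.GAVE_UP:
            return None
        self.missed += 1
        if self.state is State.FOLLOWING and self.missed >= self.max_missed:
            self.state = State.SEARCHING
        if self.state is State.SEARCHING and self.missed >= self.max_missed + self.search_limit:
            self.state = State.GAVE_UP
            return None
        # Briefly occluded or searching: keep heading to the last known position.
        return self.last_seen
```

Letting a fresh detection reset the controller from any state means the robot can resume following even after it has given up, which is one possible design choice among several.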

Results